Extract from The Guardian

Senate committee told of potential for deepfakes or cloned robocalls of Anthony Albanese and Peter Dutton, which may not be illegal under current laws.

Mon 20 May 2024 18.50 AEST

Last modified on Mon 20 May 2024 19.28 AEST

The Australian Electoral Commission has said it expects AI-generated misinformation at the next federal election, potentially from overseas actors, but warned that it doesn’t have the tools to detect or deter it.

A parliamentary committee into artificial intelligence heard on Monday that the new technology could pose risks to “democracy itself”, with concerns voters might encounter deepfakes and voice clones of Anthony Albanese or Peter Dutton before the next poll.

The AEC commissioner, Tom Rogers, told the hearing the new technology had “amazing productivity benefits” but noted “widespread examples” in recent elections – in Pakistan, the United States, Indonesia and India – of deceptive content generated by artificial intelligence (AI).

“The AEC does not possess the legislative tools or internal technical capability to deter, detect or then adequately deal with false AI-generated content concerning the election process – such as content that covers where to vote, how to cast a formal vote and why the electoral process may not be secure or trustworthy,” Rogers told the hearing.

Numerous free, popular “generative AI” platforms such as ChatGPT and Dall-E allow users to create or manipulate text, images, video or audio with simple commands. Many applications of the technology are harmless or used for entertainment, but police, security agencies and online regulators have voiced concern the tools can be used for misinformation, abuse or crime.

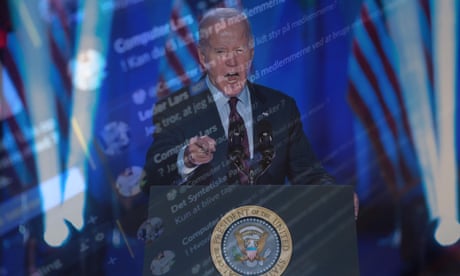

Rogers was asked by the independent senator David Pocock how likely it was that Australian elections would experience AI-generated misinformation, noting voice “clones” of the US president, Joe Biden, in robocalls in the American election cycle.

“We’re seeing increased use of those sorts of tactics in elections around the world,” Rogers said.

“I don’t think we’re going to be immune to that. So we could expect things like that to occur at the next election.”

The AEC boss said voice-cloned robocalls would not necessarily be illegal under current electoral legislation. He said the AEC’s “electoral toolkit is very constrained with what we can deal with”, but he did not agree with a suggestion from Pocock that AI content should be outlawed entirely in elections, saying some would be used in an “entirely lawful” way.

Rogers also noted warnings overseas that certain nation states may be using AI-generated content to confuse voters.

The federal government is consulting on a new code around the use of AI, including investigating mandatory “watermarks” on AI-generated content.

Rogers said he would support mandatory watermarking of AI-generated electoral content, as well as a national digital literacy campaign, stronger codes for tech platforms and even a code of conduct for political parties.

The commissioner said AI was “improving the quality of disinformation to make it more undetectable” and the AEC was working with tech companies on reducing the potential for harm from AI-generated content.

Pocock and the Greens senator David Shoebridge voiced alarm at what they claimed was a lack of action from the Albanese government on threats to election integrity.

Shoebridge said the AEC’s evidence had raised concerns about deepfakes in coming elections – which he called “a clear and present danger” that could be outlawed – and said he was worried that parliament hadn’t done more.

“This could look like a video of the prime minister saying something he never said, or a robocall purporting to be your local MP – as long as the content has an authorisation, the AEC can’t touch it,” Shoebridge said.

“The lack of action to address this risk, especially compared to the actions taken by South Korea, the US and Europe, leaves us at risk from local and international bad actors who could very well steal an election if nothing changes.”

The inquiry chair, Labor senator Tony Sheldon, said the spread of AI-generated misinformation was a problem globally and could pose a risk to “democracy itself”.

“We heard today from the AEC that no jurisdiction in the world has yet figured out how to effectively restrict the spread of AI-generated disinformation and deepfakes in elections.”

Sheldon said AI technology could improve lives but also “poses significant risks to the dignity of work, our privacy and intellectual property rights, and our democracy itself”. The former union official also raised concerns about major companies using AI in hiring and workplace decisions.

The Australian human rights commissioner, Lorraine Finlay, said she was concerned about the privacy and security risks, noting that biometric and facial recognition technology is being deployed in retail stores.

She said AI could “enhance human rights but also undermine them”, raising concerns about automated decision-making in hiring processes or government institutions.
